Reinforcement learning from human feedback

If you’ve been following AI conversations, you’ve probably come across the acronym RLHF. Yes, it sounds like something dreamed up by a LinkedIn marketer, and a tad overcomplicated.

But I think it’s actually one of the simplest and most impactful ideas in modern AI. Understanding it could be a key to knowing what today’s AI can and cannot do.

What RLHF actually is

Reinforcement Learning from Human Feedback (RLHF) is a training method designed to align AI models with human preferences. Here’s how it works in practice:

- A model generates several possible answers.

- Human reviewers evaluate them: this one’s useful, that one’s misleading, this one sounds rude.

- The model is adjusted to produce more of what humans like, less of what they don’t.

The result: the model learns not only to predict words, but to predict them in ways that are meant to feel safe, polite, and useful.
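If you like to see ideas as code, here is a minimal toy sketch of the three steps above. To be clear about what is assumed: the candidate labels, the reviewer scores, and the function names are all made up for illustration. Real RLHF typically trains a separate reward model on human comparisons and then optimizes the language model with a reinforcement learning algorithm such as PPO; this sketch skips all of that and simply nudges a three-way probability distribution toward the answers the "reviewers" liked.

```python
import math

# Hypothetical candidate answer types and made-up reviewer scores
# (+1 = reviewers liked it, -1 = reviewers disliked it).
CANDIDATES = ["useful", "misleading", "rude"]
HUMAN_SCORES = {"useful": 1.0, "misleading": -1.0, "rude": -1.0}


def softmax(logits):
    """Turn raw preference values (logits) into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def feedback_step(logits, learning_rate=0.5):
    """Take one gradient step that raises the expected human score."""
    probs = softmax(logits)
    rewards = [HUMAN_SCORES[c] for c in CANDIDATES]
    expected = sum(p * r for p, r in zip(probs, rewards))
    # Gradient of the expected score w.r.t. each logit: p_i * (r_i - expected).
    return [
        logit + learning_rate * p * (r - expected)
        for logit, p, r in zip(logits, probs, rewards)
    ]


def train(steps=50):
    """Start with no preference, then apply the feedback repeatedly."""
    logits = [0.0, 0.0, 0.0]
    for _ in range(steps):
        logits = feedback_step(logits)
    return dict(zip(CANDIDATES, softmax(logits)))


if __name__ == "__main__":
    for answer, prob in train().items():
        print(f"{answer}: {prob:.2f}")
```

Notice what the loop optimizes: the reviewer scores, and nothing else. Nowhere in the sketch is there a check for whether an answer is actually true, which is worth keeping in mind for the rest of this post.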

For a deeper dive, I recommend reading Dave Bergman at IBM, who does a more thorough job of explaining RLHF than I do.

The upside: making it usable

RLHF has clear benefits, so let's not skip this part:

- Safety: It reduces harmful or offensive outputs, an essential baseline for any consumer-facing system. It's not perfect, but protecting users at large should be a priority.

- Tone: Models trained with RLHF communicate in ways that feel approachable, less robotic, and more professional. That is, if you're not like me and that's exactly what you're looking for.

- Utility: Responses become more structured, clear, and practical, which makes them easier to integrate into workflows.

Without RLHF, today’s large language models would be much less approachable, closer to unfiltered streams of probability. They would be better suited to advanced users than to your average mom-and-dad GPT user.

The limitations: Polish is neither perfect nor perfection

But here’s the other side of the coin. RLHF does not make models truer. It simply makes their guesses sound smoother. That’s why we see cautious phrases like “While I cannot verify…” or “It depends…”.

In some contexts, that hedging is useful because it actually signals uncertainty, which I think it should. We don't need more AI mansplaining. But in others, it risks slowing people down or masking the fact that the AI is still, fundamentally, guessing rather than knowing.

From a truth perspective, polish can be a double-edged sword. The answers sound more confident, but the reliability hasn’t necessarily changed. Which in essence is what we

What can we learn for system design?

This is where RLHF becomes more than an AI buzzword: it’s a reminder about how we design advanced systems in general.

Think about it:

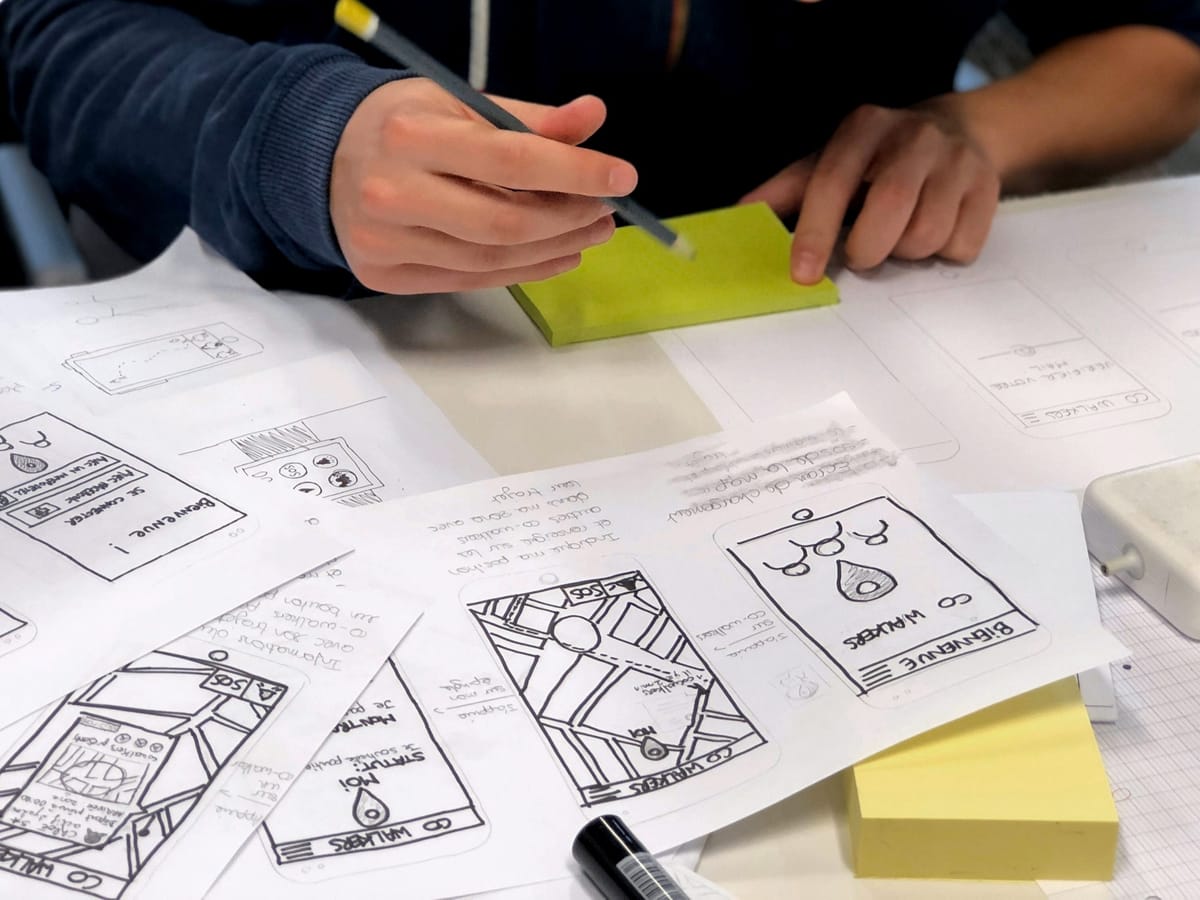

In UX design, polish can sometimes hide flaws. A beautiful interface doesn’t guarantee a usable product. The shine may distract from poor workflows or broken logic, just as a lovable prototype is far from a finished product (yes, I'm shamelessly referencing myself).

In safety-critical systems, polish without reliability can be hazardous. If users trust the polish too much, they may miss the deeper risks.

Even in everyday products, polish is still valuable. A clunky interface may be “true” to the underlying logic, but it won’t get adopted.

In essence, the same trade-off applies to RLHF: polish is what makes AI approachable and usable, but it cannot replace rigorous validation and deeper testing. For designers, engineers, and product leaders, RLHF is a cautionary tale: don’t confuse smoothness with correctness. The challenge is to balance both:

"Make it lovable, but please also make it lasting."

A short takeaway

RLHF doesn’t give AI truth. It gives AI manners. It doesn’t make models more intelligent or correct; it makes them more usable for us as end users.

In some applications, that’s exactly what we need. In others, I think it risks creating a veil of confidence that isn’t backed up underneath. As with any advanced system design, the key is to ask:

What level of polish is appropriate here?

Where do we need raw honesty, even if it’s messy?

How do we prevent users from mistaking “smooth” for “reliable”?